|

GitHub / Bluesky / Google Scholar / X / Email |

|

|

My interests revolve around the convergence of natural language processing and computer vision, with a focus on gaining insights from human cognition. I am enthusiastic about exploring language grounding within multimodal contexts and investigating the linguistic and cognitive characteristics of models. |

|

|

TL;DR The paper introduces VISOTHELLO, a multi-modal model that plays Othello using both move sequences and board images. Compared to text-only models, it predicts moves more accurately and learns more robust, structured representations, suggesting visual grounding helps language models build stronger world models. |

|

TL;DR We present RAVENEA, a large-scale benchmark with 10K+ human-ranked Wikipedia documents for culture-aware vision-language tasks. We find that retrieval boosts lightweight VLMs, showing the power of cultural augmentation. |

|

TL;DR This work introduces ChatMotion, a multimodal multi-agent framework for human motion analysis that dynamically interprets user intent, decomposes complex tasks into meta-tasks, and activates specialized function modules for motion comprehension. |

|

TL;DR Our experiments show that LMs partially converge towards representations isomorphic to those of vision models, subject to dispersion, polysemy, and frequency, which has important implications for both multi-modal processing and the LM understanding debate. |

|

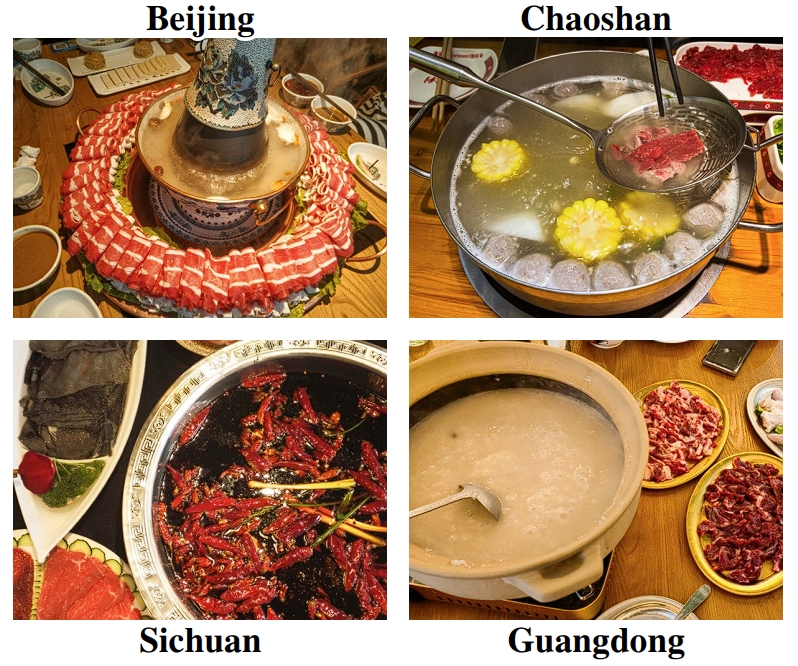

TL;DR In this work, we introduce FoodieQA, a manually curated, fine-grained image-text dataset capturing the intricate features of food cultures across various regions in China, and evaluate vision-language models (VLMs) and large language models (LLMs) on newly collected, unseen food images and corresponding questions. |

|

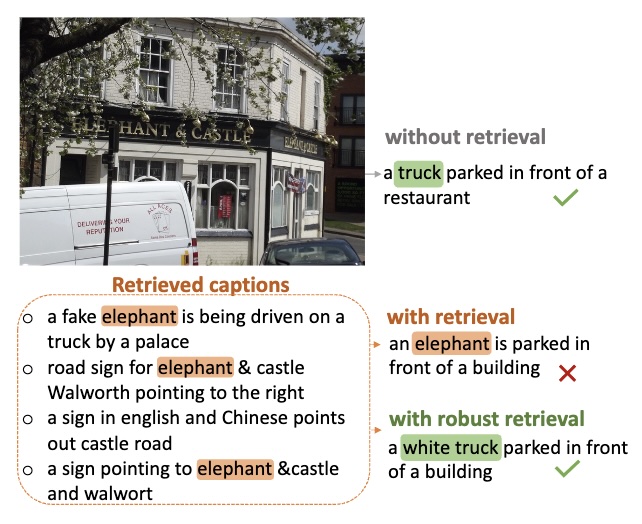

TL;DR We analyze the robustness of SmallCap, a retrieval-augmented captioning model, and propose training it on retrieved captions sampled from more diverse sets. This decreases the chance that the model learns to copy majority tokens and improves both in-domain and cross-domain performance. |

|

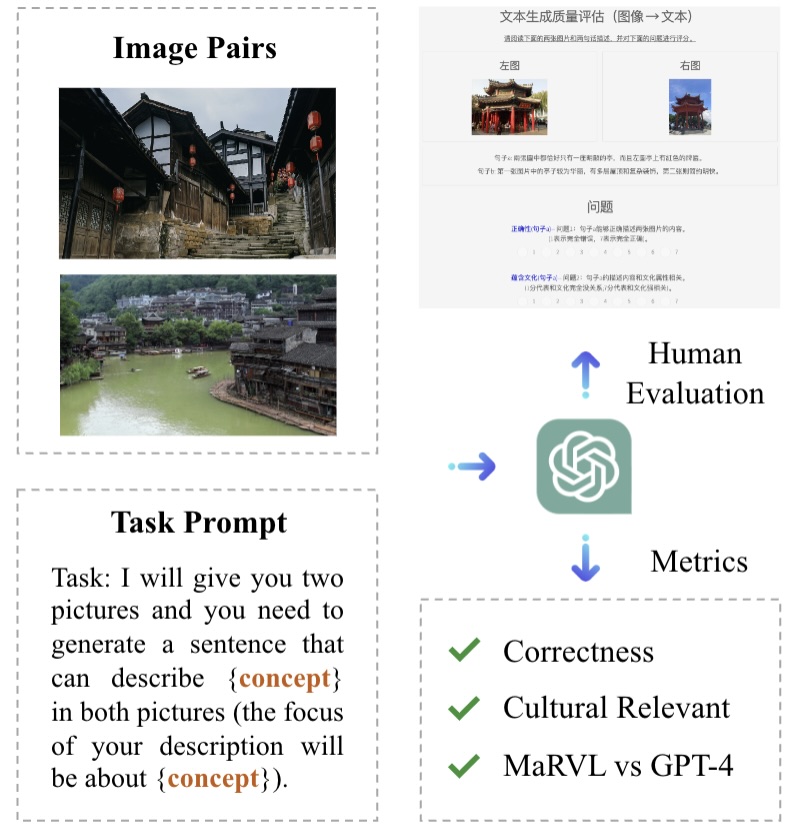

TL;DR We empirically show that GPT-4V excels at identifying cultural concepts but still exhibits weaker performance in low-resource languages, such as Tamil and Swahili, suggesting a promising solution for future visual cultural benchmark construction. |

|

TL;DR This work shows that the larger neural language models get, the more their representations are structurally similar to neural response measurements from brain imaging. |

|

TL;DR We explore the issue of copyright violations and large language models through the lens of verbatim memorization, focusing on possible redistribution of copyrighted text. |

|

TL;DR We probe ChatGPT for its conversational understanding and introduce a conversational framework (protocol) that can be adopted in future studies to assess ChatGPT's ability to generalize, to combine features, and to acquire and reason over newly introduced knowledge from human feedback. |

|

|

This page design is based on a template from Jon Barron. Big thanks! © Jiaang Li 2025 |